Index and Search Your Data Seamlessly with LlamaIndex and Large Language Models

Introduction

Large language models (LLMs) like ChatGPT have a human-like ability to understand language, enabling them to respond to questions and prompts in a manner that resembles a human conversation.

Despite this impressive ability, there is always a risk that an LLM will fail to provide a relevant and accurate answer. This can be attributed to various factors, primarily the limited, and sometimes outdated, nature of the data on which the LLM was trained, but also the stochastic nature of the algorithms it employs.

As an example, ChatGPT will not be able to provide relevant answers when the question concerns private data that the language model has not been trained on.

In such cases, LLMs can be fine-tuned using the private data to achieve more accurate responses. However, fine-tuning can be a resource-intensive and costly operation, and it has to be repeated whenever the data is updated. Fine-tuning might also cause problems such as overfitting.

Often a more effective and cost-efficient approach involves prompt engineering and storing data locally before sending it to the LLM.

Storing data locally and utilizing similarity search enables the retrieval of relevant portions of the data at lower costs. These “chunks” can be provided to the language model as context within the prompt, an approach referred to as context-augmented generation.

The language model can comprehend these examples and generate a more accurate and relevant response by leveraging this context.

This is where LlamaIndex comes into play. Formerly known as GPT Index, LlamaIndex helps you connect Large Language Models (LLMs) with your data. LlamaIndex offers a comprehensive set of tools and best practices specifically designed for efficient and cost-effective downloading, indexing, and querying of your data.

This article is based on LlamaIndex version 0.6.

See Essential Concepts for Comprehending Large Language Models (LLMs) for an introduction to LLMs.

LlamaIndex Workflow

The LlamaIndex workflow is composed of several steps. Thanks to the well-designed API, most of these steps are hidden from the end user. However, should there be a need, the API offers considerable flexibility, including the option to customize each step according to specific requirements.

Data Loading

First, I retrieve the data to be indexed and convert it to documents using LlamaIndex.

Data Processing

Next, I process the data. This includes parsing the documents into nodes (chunks of text), extracting keywords, and so on.

Data Indexing

With the data loaded and processed, I use LlamaIndex to index the data. This enables search and retrieval of nodes that are relevant to the query.

Querying

The last step is to execute a query. Behind the scenes, the query is run against the index to retrieve documents and nodes relevant to the query. An LLM is then used to generate the final response.

Optimization

LlamaIndex can optionally be used to optimize the quality of responses and reduce usage costs.

Core Concepts

LlamaIndex offers a variety of features and data structures that simplify the development and maintenance of LLM-based applications. These features allow efficient organization and querying of information for large language models. The core features provided by LlamaIndex include:

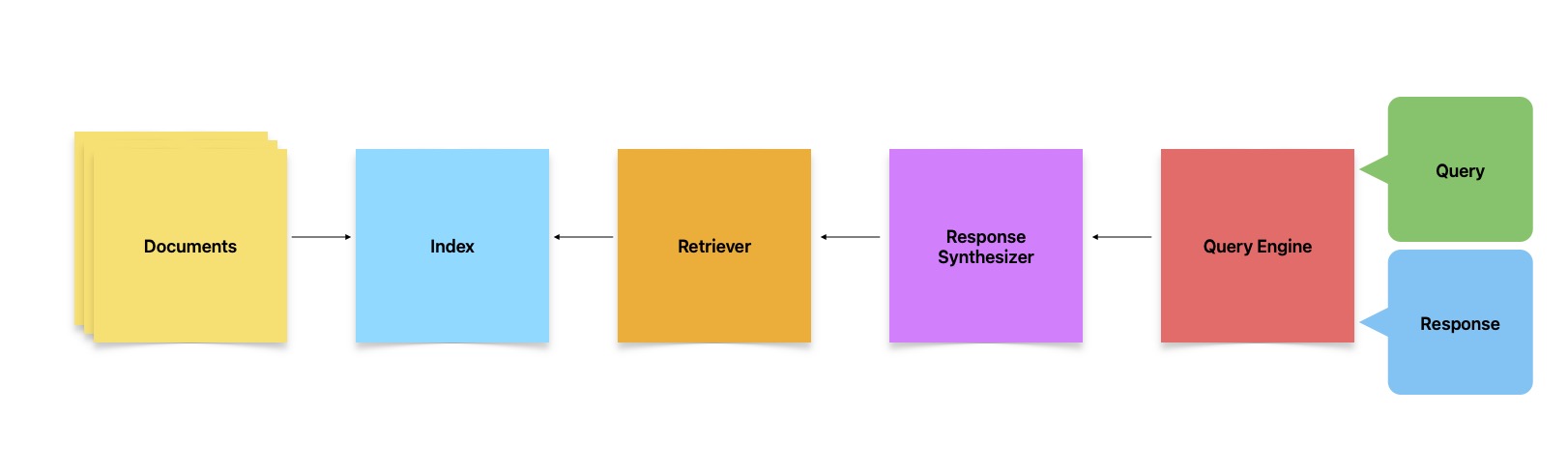

Index

An index allows for efficient queries, most often some type of similarity search, to be run on your data. The indexing process transforms chunks of data or entire documents into numerical vector representations.

Retriever

A retriever is used to retrieve nodes from an index.

Response Synthesizer

The response synthesizer generates the final response. Response synthesis can optionally include post processors and various optimizers to improve response quality and reduce costs.

Query Engine

The query engine serves as the interface and acts as a bridge between our data and the LLM. It allows us to pose questions through prompts and receive answers from the LLM.

Every call to a query engine not only generates a synthesized response but also provides the source documents so that we can perform fact checking of the response.

Data Structures

Behind LlamaIndex, there is a collection of data structures that enable its functionality. These are some of the more important ones:

Data Loader

LlamaIndex's data connectors, also known as loaders, streamline the process of loading data from online sources, such as Google Docs and Slack, and offline sources, such as text files and PDF files.

A list of LlamaIndex loaders can be found online at llamahub.ai.

Document

Documents hold the raw data, for example, the text and metadata of one or more pages of a PDF file.

Node

Nodes are chunks of the documents, such as words, sentences, and paragraphs. Due to prompt size constraints, often referred to as the “context window size”, it's not possible to feed large documents to the LLMs. One solution is to break the document down into smaller pieces, a process known as “chunking”. The GPT-4 model, for example, was released with context windows of 8,192 tokens and, in its extended variant, 32,768 tokens, with each token typically representing a word or part of a word.
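To make chunking concrete, here is a minimal sketch in plain Python. It is not LlamaIndex's own node parser: the function name `chunk_text`, the word-based sizes, and the overlap value are assumptions chosen for illustration. Real node parsers usually count tokens rather than words.

```python
def chunk_text(text: str, chunk_size: int = 20, overlap: int = 5) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words so that text near a chunk
    boundary keeps some of its surrounding context.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk already reaches the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


# A hypothetical 50-word document, split into overlapping chunks ("nodes").
document = " ".join(f"word{i}" for i in range(50))
nodes = chunk_text(document, chunk_size=20, overlap=5)
print(len(nodes))
```

Overlap trades a little extra storage for robustness: a fact that straddles a boundary is less likely to be split across two nodes without context.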

Token

A token is the base unit, such as a character, a word, or part of a word, that the LLM is able to work with.

A tokenizer is responsible for splitting the text into tokens.
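As a rough illustration, the sketch below splits text into word and punctuation tokens with a regular expression. This is a toy stand-in: the tokenizers used by actual LLMs typically split text into subword units, so their token counts will differ. The name `toy_tokenize` is made up for this example.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into words and individual punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


tokens = toy_tokenize("LlamaIndex indexes your data, fast!")
print(tokens)       # ['LlamaIndex', 'indexes', 'your', 'data', ',', 'fast', '!']
print(len(tokens))  # 7
```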

Embeddings

In the field of natural language processing, an embedding refers to a vector, which can be thought of as a list of numbers. This vector representation of a word or a sequence of words captures its meaning and grammar in a way that enables the model to process and comprehend the text.
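The idea of "text as a list of numbers" can be sketched with a toy example. The `embed` function below simply counts vocabulary words, which is an assumption made for illustration only; real embedding models produce dense vectors learned from data and capture meaning far better. Cosine similarity, however, is a standard way to compare two vectors.

```python
import math
from collections import Counter


def embed(text: str, vocabulary: list[str]) -> list[float]:
    """Toy embedding: one dimension per vocabulary word, holding its count."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocabulary]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


vocabulary = ["cat", "dog", "sat", "mat", "ran"]
v1 = embed("the cat sat on the mat", vocabulary)
v2 = embed("a cat sat on a mat", vocabulary)
v3 = embed("the dog ran", vocabulary)

print(cosine_similarity(v1, v2))  # close to 1.0: same vocabulary words
print(cosine_similarity(v1, v3))  # 0.0: no vocabulary words in common
```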

Prompts

Interaction with an LLM is done through a prompt. This is why writing effective prompts, often referred to as Prompt Engineering, is the key to getting the most out of LLMs. LlamaIndex includes prompt templates that follow best practices and also allows you to customize them to your needs.

Prompt Engineering

The quality of the prompts is crucial to the effectiveness of an LLM.

Prompt engineering involves designing effective prompts (input) for Large Language Models (LLMs) to improve their responses (output).

For instance, an effective prompt that guides the LLM towards a correct answer may include context, facts, and specify what to avoid or include in the response.
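A prompt of this kind can be sketched as a simple string template. The wording of `QA_TEMPLATE` and the helper `build_prompt` are my own assumptions for illustration and are not LlamaIndex's built-in templates.

```python
# Hypothetical question-answering template: context first, then instructions
# on what to use and what to avoid, then the question.
QA_TEMPLATE = (
    "Context information is below.\n"
    "---------------------\n"
    "{context}\n"
    "---------------------\n"
    "Using only the context above and no prior knowledge, "
    "answer the question. If the answer is not in the context, say so.\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(question: str, chunks: list[str]) -> str:
    """Insert the retrieved text chunks and the question into the template."""
    context = "\n\n".join(chunks)
    return QA_TEMPLATE.format(context=context, question=question)


prompt = build_prompt(
    "What is the capital of France?",
    ["Paris is the capital of France.", "France is in Europe."],
)
print(prompt)
```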

LlamaIndex provides a range of prompt templates optimized for various tasks such as question-answering, prompt refinement, keyword extraction, code generation, and more.

Prompt engineering is an evolving field of research, with new techniques being continually developed.

Model

In addition to the prompt, the choice of LLM plays an important role in the response quality. Different models have distinct characteristics and strengths, which affect their performance and the quality of the responses they are able to generate.

For example, OpenAI's text-davinci-003 model, a member of the GPT-3.5 family, is a cost-efficient option and serves as the default model employed by LlamaIndex. However, the optimal model depends on the use case. OpenAI's Codex model, for example, is optimized for generating and understanding code.

With LlamaIndex you can choose a different, non-default, LLM model when constructing an index.

Evaluating the performance of a model for your specific use case is a crucial aspect of developing applications that leverage language models.

Vector Store

Vectors, referred to as embeddings in the context of Large Language Models, are numerical representations of the nodes and tokens that Large Language Models are able to understand and process. These are not features of LlamaIndex per se, but fundamental concepts used in machine learning and the development of LLM models.

Vectors, along with metadata, are most often stored in-memory or in a vector database.

Vector stores enable fast and efficient retrieval of text (embeddings) relevant to the prompt from large datasets that the language model has not been trained on.

They achieve this through the following key features, which are essential in the development of LLM-based applications:

- Vector storage: Vector stores provide a mechanism to store and organize vectors (embeddings) efficiently.

- Indexing capabilities: Vector stores offer indexing capabilities, which makes vector searching and retrieval faster.

- Similarity search: Vector stores enable similarity search, which involves finding vectors that are most similar to a given query vector.

LlamaIndex supports many popular vector stores, including Milvus, Redis, and Pinecone.

Similarity Search

Vector stores use various search algorithms, such as ANN (Approximate Nearest Neighbors), to find text that is similar to the query prompt.

These text chunks are then incorporated as context within the prompt. By doing so, the LLM can use the provided context to generate a more informed and accurate response to the prompt.

LlamaIndex can work with several ANN libraries and algorithms, including FAISS, Annoy, and HNSW. These enable efficient approximate nearest neighbor search, trading a small amount of accuracy for speed.

Index & Index Composability

LlamaIndex offers many types of indices, each designed to store nodes in distinct ways and execute data queries using different approaches.

When the corpus of data is large, using similarity search alone on one index may not be sufficient to find the most relevant data. This is because the result set will also be large in these cases.

For complex use cases like these, where having one huge index leads to poor results, the concept of index composability offers a solution.

Software engineers can then choose the most suitable index type(s) for their problem and build a composable graph from multiple indices.

List Index

A List Index is a list-based data structure that takes documents as input and breaks them up into small document chunks.

During querying, it uses the create and refine paradigm to construct an initial answer using the first text chunk.

The answer undergoes further refinement by using subsequent text chunks as context. This iterative process of feeding in additional information can enhance the accuracy and depth of the answer, allowing for a more comprehensive and context-aware response.

It does not use an LLM during index construction.

Vector Store Index

A Vector Store Index is built on top of a vector store such as ChromaDB.

It uses an embedding model (by default, OpenAI's text-embedding-ada-002) rather than the LLM itself to create the embeddings during index construction.

Tree Index

The Tree Index is a tree-structured index in which each node serves as a summary of its corresponding children nodes. This tree-based organization allows for efficient navigation and retrieval of information, with each parent node encapsulating a concise representation of its child nodes' content.

During querying, the tree is traversed to construct an answer.

A tree index is useful for summarizing a collection of documents.

Composable Graph Index

In a Composable Graph Index, you can specify one index as the root and specify separate children indices. Each child index should have a summary.

During querying, the graph of indices is traversed recursively to construct an answer.

Knowledge Graph Index

The Knowledge Graph Index uses an LLM with an extraction prompt to extract triplets, in the form of (subject, predicate, object), from document chunks during index creation.

During querying, it extracts a set of relevant keywords from the query using a keyword extraction prompt. The retrieved chunks are then ranked based on the number of matches with the keywords, ensuring the most relevant results are prioritized.

It constructs the answer using the create and refine paradigm.

The keyword lookup works much like a hash table, which makes this index closely related to the keyword table index.
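The hash-table-like keyword lookup and the ranking by number of matches can be sketched as follows. This is a simplified stand-in: keywords are extracted by plain word splitting with a small stopword list instead of an LLM prompt, and the function names are assumptions for this example.

```python
import re
from collections import Counter, defaultdict

STOPWORDS = {"a", "an", "the", "is", "are", "of", "in", "on", "and", "to",
             "what", "how", "does"}


def extract_keywords(text: str) -> set[str]:
    """Stand-in for the keyword extraction prompt: lowercase words minus stopwords."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {word for word in words if word not in STOPWORDS}


def build_keyword_table(chunks: dict[str, str]) -> dict[str, set[str]]:
    """Map each keyword to the ids of the chunks that contain it."""
    table: dict[str, set[str]] = defaultdict(set)
    for chunk_id, text in chunks.items():
        for keyword in extract_keywords(text):
            table[keyword].add(chunk_id)
    return dict(table)


def retrieve(query: str, table: dict[str, set[str]]) -> list[str]:
    """Rank chunk ids by how many query keywords they match."""
    matches: Counter = Counter()
    for keyword in extract_keywords(query):
        for chunk_id in table.get(keyword, ()):
            matches[chunk_id] += 1
    ranked = sorted(matches.items(), key=lambda item: (-item[1], item[0]))
    return [chunk_id for chunk_id, _ in ranked]


chunks = {
    "c1": "Llamas are domesticated animals of the Andes.",
    "c2": "An index maps keywords to text chunks.",
    "c3": "A keyword index is similar to a hash table.",
}
table = build_keyword_table(chunks)
ranked = retrieve("How does a keyword index work?", table)
print(ranked)  # ['c3', 'c2']
```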

SQL Index

The SQL Index allows you to connect your database data to an LLM and ask questions about the data.

Pandas Index

The Pandas Index allows you to connect pandas to an LLM and ask questions about the data.

Retriever

In order to retrieve your data (as nodes) from an index, LlamaIndex uses retrievers. LlamaIndex supports many types of retrievers, including the following:

Vector Store Retriever

The Vector Store Retriever fetches the top-k most similar nodes from a vector store index.

List Retriever

You can use the List Retriever to fetch all nodes from a list index. This retriever supports two modes: default and embeddings.

Tree Retriever

The Tree Retriever, as its name suggests, extracts nodes from a hierarchical tree of nodes. This retriever supports many different modes; the default is select_leaf.

Keyword Table Retriever

The Keyword Table Retriever extracts keywords from the query and uses them to find nodes having matching keywords.

The retriever supports three different modes: default, simple, and rake.

Knowledge Graph Retriever

The Knowledge Graph Retriever fetches nodes from a knowledge graph index by finding the triplets that are relevant to the query.

It supports keywords, embeddings, and hybrid mode. Hybrid mode uses both keywords and embeddings to find relevant triplets.

Query Engine

A query engine processes a given query and generates a response by utilizing a retriever and a response synthesizer.

The query engine generates a response that includes the answer along with the sources of the answer (nodes).

Retriever Query Engine

The Retriever Query Engine uses a retriever and a response synthesizer to generate the response.

Graph Query Engine

The Graph Query Engine can parse a composable graph of indices and is able to use a different query engine for each subindex.

Multistep Query Engine

The Multistep Query Engine seeks to answer a question by breaking it down into subquestions, which are answered sequentially until no further questions remain. This query engine utilizes another query engine and query transformations to generate the subquestions. A stop function is used to tell the query engine when to stop asking questions.
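The control flow described above can be sketched as a loop. All names here are hypothetical: `next_subquestion` stands in for the query transformation, `answer_subquestion` for the inner query engine, and `should_stop` for the stop function. The example feeds in scripted stubs instead of calling an LLM.

```python
from typing import Callable, Optional

History = list[tuple[str, str]]  # (sub-question, answer) pairs collected so far


def multistep_query(
    question: str,
    next_subquestion: Callable[[str, History], Optional[str]],
    answer_subquestion: Callable[[str], str],
    should_stop: Callable[[History], bool],
    max_steps: int = 5,
) -> History:
    """Ask sub-questions one at a time until the stop function says to stop."""
    history: History = []
    for _ in range(max_steps):
        subquestion = next_subquestion(question, history)
        if subquestion is None:  # nothing left to ask
            break
        history.append((subquestion, answer_subquestion(subquestion)))
        if should_stop(history):
            break
    return history


# Scripted stubs in place of an LLM and a real query engine.
scripted = ["Who wrote the report?", "When did that author join the company?"]
answers = {scripted[0]: "Alice", scripted[1]: "2019"}


def scripted_subquestion(question: str, history: History) -> Optional[str]:
    return scripted[len(history)] if len(history) < len(scripted) else None


steps = multistep_query(
    "When did the report's author join the company?",
    scripted_subquestion,
    lambda sub: answers[sub],
    should_stop=lambda history: len(history) >= 2,
)
print(steps)
```

The `max_steps` cap is a safety net of my own: without it, a stop function that never fires would keep issuing paid LLM calls.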

Transform Query Engine

The Transform Query Engine changes the original query before passing it to the query engine.

It supports many types of query transformations, for example Hypothetical Document Embeddings (HyDE).

Router Query Engine

The Router Query Engine selects one query engine out of a list of query engines. The selection is made based on the query engines' metadata and the query itself.
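Routing can be sketched as choosing the engine whose description best fits the query. In the sketch below the choice is made by counting shared words, which is a deliberate simplification; an actual router would typically ask an LLM to make the selection. `EngineTool` and `select_engine` are names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EngineTool:
    name: str
    description: str              # metadata the router uses to choose
    query: Callable[[str], str]   # the wrapped query engine


def select_engine(question: str, tools: list[EngineTool]) -> EngineTool:
    """Pick the tool whose description shares the most words with the question."""
    question_words = set(question.lower().split())

    def overlap(tool: EngineTool) -> int:
        return len(question_words & set(tool.description.lower().split()))

    return max(tools, key=overlap)


tools = [
    EngineTool(
        name="summary",
        description="useful for summarizing the whole document",
        query=lambda q: "summary engine answer",
    ),
    EngineTool(
        name="lookup",
        description="useful for answering specific factual questions about dates and numbers",
        query=lambda q: "lookup engine answer",
    ),
]

chosen = select_engine("Give me a summary of the whole document", tools)
print(chosen.name)  # summary
```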

Sub-Question Query Engine

The Sub-Question Query Engine takes a complex query and breaks it down into sub-questions.

The final answer is generated from the answers to all sub-questions.

Response Synthesizer

A response synthesizer takes a list of nodes as input and generates output in the form of a response.

LlamaIndex supports different modes of response synthesis, including:

Refine

An iterative way of generating a response that uses all nodes. The initial answer is generated using the first node as context. This answer and the second node are then fed as context to a refinement prompt. This process continues until all nodes have been processed in the same way and the final response has been generated.
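The refine loop can be sketched in a few lines. The prompt wording and the `llm` callable are assumptions for illustration; the stub below just numbers its answers so the flow of calls is visible.

```python
from typing import Callable


def refine_synthesize(question: str, chunks: list[str], llm: Callable[[str], str]) -> str:
    """Build an answer from the first chunk, then refine it with each later chunk."""
    if not chunks:
        return ""
    answer = llm(f"Context: {chunks[0]}\nQuestion: {question}\nAnswer:")
    for chunk in chunks[1:]:
        answer = llm(
            f"Question: {question}\n"
            f"Existing answer: {answer}\n"
            f"New context: {chunk}\n"
            "Refine the existing answer using the new context if it helps; "
            "otherwise repeat the existing answer."
        )
    return answer


# Stub LLM that records every prompt it receives.
calls: list[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"answer-{len(calls)}"


final = refine_synthesize(
    "What is a node?", ["chunk one", "chunk two", "chunk three"], fake_llm
)
print(final)       # answer-3
print(len(calls))  # 3: one LLM call per chunk
```

Note the cost implication: the number of LLM calls grows linearly with the number of nodes.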

Compact and Refine

Same as refine, except the text chunks are merged into larger chunks to optimize cost and performance.
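The merging step can be sketched as a greedy packing of chunks into larger ones. This sketch measures size in characters, which is an assumption made to keep it dependency-free; a real implementation would count tokens against the model's context window.

```python
def compact_chunks(chunks: list[str], max_chars: int) -> list[str]:
    """Greedily merge consecutive chunks while the result fits in max_chars."""
    merged: list[str] = []
    current = ""
    for chunk in chunks:
        candidate = chunk if not current else current + "\n" + chunk
        if len(candidate) <= max_chars or not current:
            # Either it fits, or this chunk alone is oversized and starts its own group.
            current = candidate
        else:
            merged.append(current)
            current = chunk
    if current:
        merged.append(current)
    return merged


compacted = compact_chunks(["aaaa", "bbbb", "cccc", "dddd"], max_chars=9)
print(compacted)  # ['aaaa\nbbbb', 'cccc\ndddd']
```

Four chunks become two, so a refine pass over the compacted list needs two LLM calls instead of four.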

Tree Summarize

Tree summarization is a bottom-up approach to constructing a response from a list of nodes. It involves generating a summary (a parent node) from every pair of nodes, and repeating this on the resulting summaries until only one answer remains.
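The pairwise reduction can be sketched as follows. The `summarize` callable stands in for an LLM call; the stub used here only wraps its input in parentheses so the shape of the tree is visible in the output. How an odd leftover node is handled is my own choice for this sketch.

```python
from typing import Callable


def tree_summarize(chunks: list[str], summarize: Callable[[str], str]) -> str:
    """Combine nodes two at a time, level by level, until one summary remains."""
    if not chunks:
        return ""
    level = list(chunks)
    while len(level) > 1:
        next_level = []
        for i in range(0, len(level), 2):
            pair = level[i:i + 2]  # the last group holds one node if the count is odd
            next_level.append(summarize("\n".join(pair)))
        level = next_level
    return level[0]


def fake_summarize(text: str) -> str:
    """Stub summarizer: shows which inputs were combined."""
    return "(" + text.replace("\n", "+") + ")"


result = tree_summarize(["a", "b", "c", "d", "e"], fake_summarize)
print(result)  # (((a+b)+(c+d))+((e)))
```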

Simple Summarize

Combines all text chunks and makes one LLM call using the whole text as context.

Query Transformation

LlamaIndex supports automatic prompt engineering techniques that aim to improve the prompt, in a single step or multiple steps, in order to obtain a better answer from the LLM. This includes, for example, the following techniques:

HyDE (Hypothetical Document Embeddings)

The Hypothetical Document Embeddings (HyDE) query transform improves accuracy by generating a hypothetical document/answer as explained in the original paper, published by Luyu Gao et al. in 2022:

While dense retrieval has been shown effective and efficient across tasks and languages, it remains difficult to create effective fully zero-shot dense retrieval systems when no relevance label is available. In this paper, we recognize the difficulty of zero-shot learning and encoding relevance. Instead, we propose to pivot through Hypothetical Document Embeddings (HyDE). Given a query, HyDE first zero-shot instructs an instruction-following language model (e.g. InstructGPT) to generate a hypothetical document.

Our experiments show that HyDE significantly outperforms the state-of-the-art unsupervised dense retriever Contriever and shows strong performance comparable to fine-tuned retrievers, across various tasks (e.g. web search, QA, fact verification) and languages (e.g. sw, ko, ja).
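In code, the idea amounts to searching with the embedding of a generated answer instead of the embedding of the query itself. The sketch below shows only this control flow; `generate`, `embed`, and `search` are hypothetical callables standing in for an LLM, an embedding model, and a vector store, and the prompt wording is my own.

```python
from typing import Callable


def hyde_retrieve(
    query: str,
    generate: Callable[[str], str],
    embed: Callable[[str], list[float]],
    search: Callable[[list[float]], list[str]],
) -> list[str]:
    """Retrieve with the embedding of a hypothetical answer, not of the query."""
    hypothetical_doc = generate(
        f"Please write a passage to answer the question.\nQuestion: {query}\nPassage:"
    )
    return search(embed(hypothetical_doc))


# Stubs so the flow can be run without any external service.
def fake_generate(prompt: str) -> str:
    return "Llamas are native to the Andes mountains of South America."


def fake_embed(text: str) -> list[float]:
    return [float(len(text)), float(text.count(" "))]


searched_vectors: list[list[float]] = []


def fake_search(vector: list[float]) -> list[str]:
    searched_vectors.append(vector)
    return ["doc-7"]


hits = hyde_retrieve("Where do llamas live?", fake_generate, fake_embed, fake_search)
print(hits)  # ['doc-7']
```

The hypothetical document may contain false details, but it tends to resemble real answer passages more closely than a short question does, which is what makes it a useful search key.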

Single-Step Query Decomposition

In Single-Step Query Decomposition, the original query is broken down into two or more subquestions that are answered separately and used to generate the final answer. This works with questions that, for example, compare two things.

Multi-Step Query Transformations

In Multi-Step Query Decomposition, the original query is broken down into subquestions that are answered sequentially, one after the other; the final answer is generated from these answers.

Post Processors

The LlamaIndex post-processor functionality enables filtering and augmentation of search results (nodes) before sending them to the response synthesizer. For instance, you can require specific keywords to be absent or present in the retrieved nodes. You can also rank results based on attributes such as time.

The query engine handles post-processing of nodes retrieved from the index. There are many types of post-processors, including the following:

SimilarityPostprocessor

Allows you to require the retrieved nodes to have a minimum similarity score.

KeywordNodePostprocessor

Allows you to require certain keywords to be present in nodes.
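Both filters are easy to sketch in plain Python. The `ScoredNode` class and the function names below are assumptions for this example and do not mirror the LlamaIndex classes; they only illustrate the filtering logic.

```python
from dataclasses import dataclass


@dataclass
class ScoredNode:
    text: str
    score: float  # similarity score assigned by the retriever


def filter_by_similarity(nodes: list[ScoredNode], cutoff: float) -> list[ScoredNode]:
    """Keep only nodes whose similarity score reaches the cutoff."""
    return [node for node in nodes if node.score >= cutoff]


def filter_by_keywords(
    nodes: list[ScoredNode],
    required: tuple[str, ...] = (),
    excluded: tuple[str, ...] = (),
) -> list[ScoredNode]:
    """Keep nodes that contain every required keyword and no excluded keyword."""
    kept = []
    for node in nodes:
        lowered = node.text.lower()
        has_required = all(keyword.lower() in lowered for keyword in required)
        has_excluded = any(keyword.lower() in lowered for keyword in excluded)
        if has_required and not has_excluded:
            kept.append(node)
    return kept


retrieved = [
    ScoredNode("LlamaIndex supports vector stores", 0.82),
    ScoredNode("Unrelated cooking recipe", 0.31),
    ScoredNode("Vector stores enable similarity search", 0.77),
]
similar = filter_by_similarity(retrieved, cutoff=0.5)
final_nodes = filter_by_keywords(similar, required=("vector",), excluded=("llamaindex",))
print([node.text for node in final_nodes])
```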

PrevNextNodePostprocessor & AutoPrevNextNodePostprocessor

The PrevNextNodePostprocessor and AutoPrevNextNodePostprocessor both fetch additional nodes (previous, next, both).

FixedRecencyPostprocessor

If the query involves a time factor (temporal query), this post-processor orders the nodes by date and returns the k most recent nodes to the response synthesizer.
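Ordering by date and keeping the newest nodes can be sketched as a sort followed by a slice. The dictionary layout with a `date` key is an assumption for this example.

```python
from datetime import date


def most_recent_nodes(nodes: list[dict], k: int) -> list[dict]:
    """Sort nodes from newest to oldest and keep the first k."""
    return sorted(nodes, key=lambda node: node["date"], reverse=True)[:k]


dated_nodes = [
    {"text": "Q1 report", "date": date(2023, 3, 31)},
    {"text": "Q3 report", "date": date(2023, 9, 30)},
    {"text": "Q2 report", "date": date(2023, 6, 30)},
]
latest = most_recent_nodes(dated_nodes, k=2)
print([node["text"] for node in latest])  # ['Q3 report', 'Q2 report']
```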

EmbeddingRecencyPostprocessor

The EmbeddingRecencyPostprocessor is similar to FixedRecencyPostprocessor, but filters out nodes that have a high embedding similarity with the current node.

TimeWeightedPostprocessor

The TimeWeightedPostprocessor ranks the nodes by their recency.

PIINodePostprocessor

The PIINodePostprocessor masks Personally Identifiable Information (PII) in the text using a local LLM such as StableLM. For example, it replaces a credit card number with [CREDIT_CARD_NUMBER].
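The effect of masking can be illustrated with a regular expression. To be clear, this is a different technique from the LLM-based masking described above: a pattern can only catch formats it was written for, whereas a language model can recognize PII from context. The pattern and function name are assumptions for this sketch.

```python
import re

# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def mask_credit_cards(text: str) -> str:
    """Replace anything that looks like a card number with a placeholder."""
    return CARD_PATTERN.sub("[CREDIT_CARD_NUMBER]", text)


masked = mask_credit_cards("Card 4111 1111 1111 1111 expires soon.")
print(masked)  # Card [CREDIT_CARD_NUMBER] expires soon.
```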

NERPIINodePostprocessor

The NERPIINodePostprocessor uses a Hugging Face NER (named entity recognition) model for PII masking. For example, it replaces an organization name with [ORG_1].

CohereRerank

The CohereRerank post-processor uses an external service, the Cohere API, to rerank and improve the search results.

LLMRerank

The LLMRerank post processor uses an embeddings retriever and an LLM to rerank the results.

Response Evaluation

The quality of the LLM's response can be evaluated using tools provided by LlamaIndex. This allows us to choose the most suitable language model and configuration for our problem, and minimize the number of errors and hallucinations.

In LlamaIndex, the Playground module offers an automated method for testing your data across a wide range of combinations involving indices, models, embeddings, modes, and more.

LlamaIndex also has an evaluation module that allows us to evaluate the response to see if we got a relevant response from the language model.

The response from a query engine always includes both the response text and the source documents. This allows us to evaluate the response with regards to the input query and source documents.

The response evaluator uses an LLM, such as GPT-4, to evaluate whether the response answers the question.

There are two evaluation modes in LlamaIndex:

Response and Context Evaluation

The response and context evaluation mode evaluates the correctness of the response against the context, in other words, the source nodes. This means the response is considered to be correct if it is supported by the retrieved context nodes.

If the response does not match the retrieved source, the LLM model is probably hallucinating.

Query, Response, and Source Context Evaluation

When using query, response, and source context evaluation, the correctness of the response is evaluated against both the query and the retrieved source context.

The Sources Evaluator will look at each source node in the results and see if the text matches the query.

Cost & Performance Optimization

Cost and performance optimization are important considerations when building LLM-based applications.

For instance, OpenAI charges for its models by usage (that is, by the number of tokens), so it makes sense to try to minimize the number of tokens used by your application. With LlamaIndex's optimizer features, such as the Token Predictor, you can optimize costs by predicting and minimizing the number of tokens sent to the LLM.

Playground Module

Costs and performance can be optimized through various configuration options, such as selecting the appropriate model. The Playground module offers a method for automatically testing your data using different combinations of configuration settings, which include indices, models, embeddings, retriever modes, and more.

Cost Predictor

LlamaIndex's cost predictors help estimate the expenses associated with using language models such as OpenAI's GPT models.

Each interaction with an LLM incurs a cost. For example, OpenAI's Davinci costs $0.02 per 1,000 tokens at the time of writing (2023). The cost associated with building an index and querying depends on many factors, including:

- The LLM used, e.g., GPT-4 is more expensive than GPT-3

- The size of the data, i.e., the number of tokens used during indexing

- The size of the prompt, i.e., the number of tokens used during prompting
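A back-of-the-envelope estimate follows directly from these factors. The token counts below are made-up numbers, and the sketch applies a single price to all tokens; in practice embedding tokens and LLM tokens are usually priced differently.

```python
def estimate_cost(num_tokens: int, price_per_1k_tokens: float) -> float:
    """Cost in dollars for a given number of tokens at a per-1,000-token price."""
    return num_tokens / 1000 * price_per_1k_tokens


DAVINCI_PRICE = 0.02      # dollars per 1,000 tokens, the 2023 price quoted above
indexing_tokens = 250_000  # hypothetical: tokens processed while building the index
query_tokens = 1_500       # hypothetical: prompt plus response for one query

total = estimate_cost(indexing_tokens + query_tokens, DAVINCI_PRICE)
print(f"${total:.2f}")  # $5.03
```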

Storage Context

LlamaIndex's storage context feature allows for the reuse of nodes between multiple indices, thus lowering costs by preventing duplication of data across indices.

Sentence Embedding Optimizer

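The Sentence Embedding Optimizer optimizes costs by shortening the input text: sentences whose embeddings are least similar to the query are dropped before the text is sent to the LLM.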
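One way to shorten input text is to keep only the sentences most related to the query. The sketch below assumes that approach and scores sentences by word overlap (Jaccard similarity) as a dependency-free stand-in for embedding similarity; the function name and the `keep_ratio` parameter are assumptions for this example.

```python
import re


def shorten_context(text: str, query: str, keep_ratio: float = 0.5) -> str:
    """Keep the fraction of sentences that overlap most with the query."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    query_words = set(re.findall(r"\w+", query.lower()))

    def score(sentence: str) -> float:
        words = set(re.findall(r"\w+", sentence.lower()))
        union = words | query_words
        return len(words & query_words) / len(union) if union else 0.0

    keep = max(1, round(len(sentences) * keep_ratio))
    best = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:keep]
    # Emit the surviving sentences in their original order.
    return " ".join(sentences[i] for i in sorted(best))


context = (
    "Llamas live in the Andes. The index stores vectors. "
    "It rained yesterday. Vectors are compared with cosine similarity."
)
shortened = shorten_context(context, "how are vectors stored and compared")
print(shortened)
```

Here two of the four sentences are dropped, so the prompt that reaches the LLM is roughly half as long.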

Conclusion

LlamaIndex offers a comprehensive toolset for processing and indexing your data, as well as optimizing the performance, quality, and cost-efficiency of your Large Language Model-based applications.

While implementing your own toolset is possible, understanding the concepts and techniques underlying LlamaIndex is beneficial, especially if you are new to the field of Large Language Models (LLMs).